Toxicity - a Hugging Face Space by evaluate-measurement

By a mysterious writer

Last updated 9 April 2025

The toxicity measurement aims to quantify the toxicity of the input texts using a pretrained hate speech classification model.
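As a rough illustration of how such a measurement might be used, the sketch below loads it through the `evaluate` library and then summarizes per-text scores. The helper names `score_with_evaluate` and `summarize` are hypothetical and not part of the Space. The summary statistics (maximum score, share of texts at or above a 0.5 threshold) are assumptions about what a reader might want to report, and the example scores are made up for illustration.

```python
from typing import Dict, List


def score_with_evaluate(texts: List[str]) -> List[float]:
    """Return one toxicity score per text using the `evaluate` library.

    Assumes `evaluate`, `transformers`, and `torch` are installed, and that
    the pretrained classifier can be downloaded on first use.
    """
    import evaluate  # imported lazily so the rest of this sketch runs without it

    toxicity = evaluate.load("toxicity", module_type="measurement")
    return toxicity.compute(predictions=texts)["toxicity"]


def summarize(scores: List[float], threshold: float = 0.5) -> Dict[str, float]:
    """Summarize per-text scores (hypothetical helper, threshold is an assumption)."""
    if not scores:
        raise ValueError("scores must not be empty")
    flagged = sum(score >= threshold for score in scores)
    return {
        "max_toxicity": max(scores),
        "toxicity_ratio": flagged / len(scores),
    }


# Made-up scores standing in for real classifier output.
example_scores = [0.1, 0.9, 0.6, 0.2]
summary = summarize(example_scores)
print(summary)
```

To score real text, pass a list of strings to `score_with_evaluate` and feed the result to `summarize`. Scores come from a hate speech classifier, so they are best read as that model's estimate rather than a general judgment of the text.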

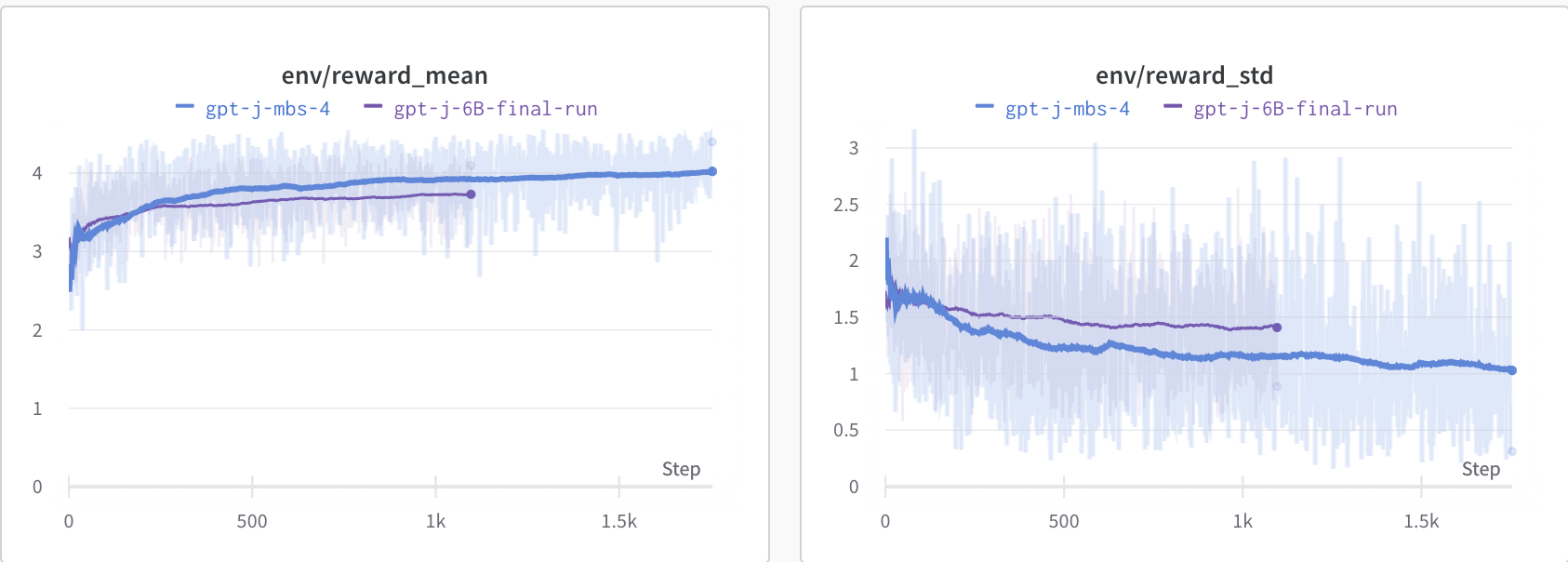

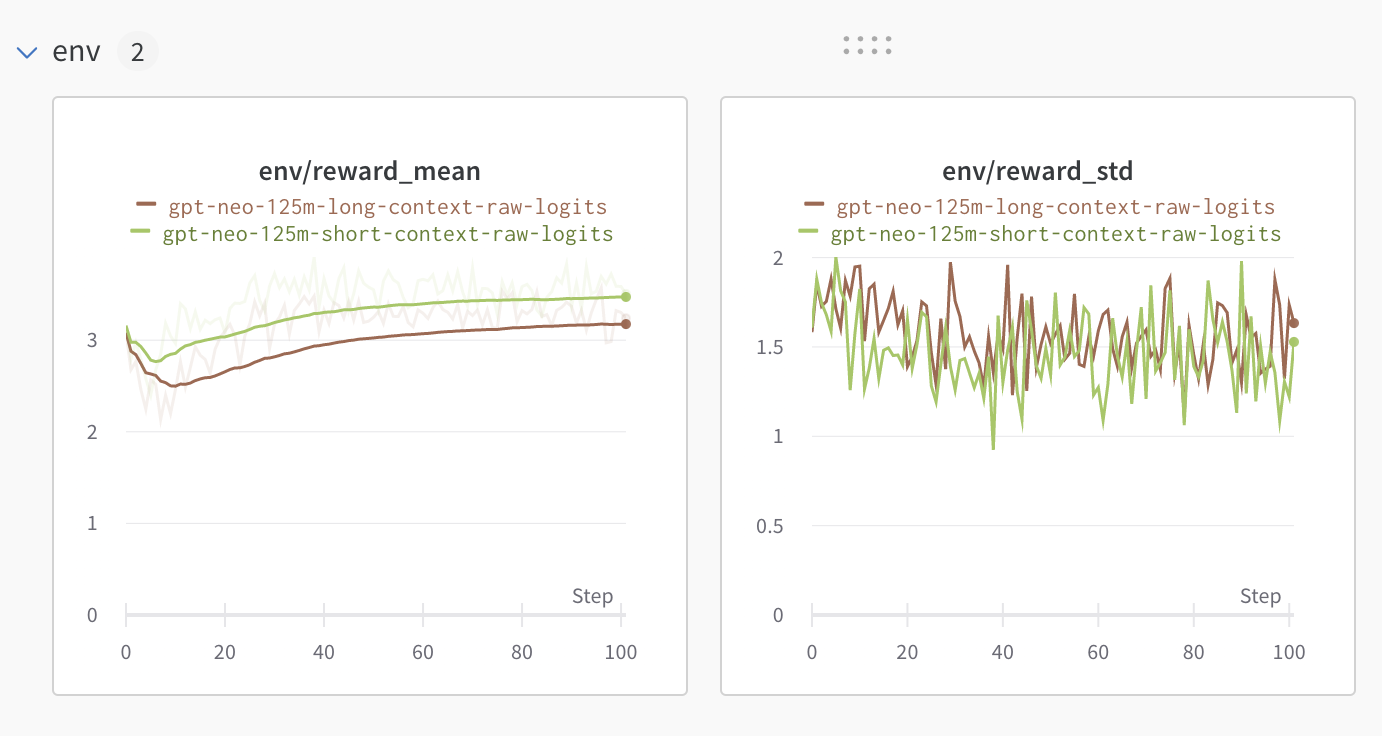

Detoxifying a Language Model using PPO

evaluate-measurement (Evaluate Measurement)

AI tools to write (Julia) code (best/worse experience), e.g. ChatGPT, GPT 3.5 - Offtopic - Julia Programming Language

Human Evaluation of Large Language Models: How Good is Hugging Face's BLOOM?

ReLM - Evaluation of LLM

AI News, 13 December 2023 (1st Edition): Models hosted on Hugging Face, edge computing with

Data, Label, & Model Quality Metrics in Encord

Llama 2 on Hugging Face

Text generation with GPT-2 - Model Differently

Machine Learning Service - SageMaker Studio - AWS

Hugging Face Fights Biases with New Metrics

Recomendado para você

-

Toxicity - Wikipedia09 abril 2025

Toxicity - Wikipedia09 abril 2025 -

What You Should Know About Lubricant Toxicity09 abril 2025

What You Should Know About Lubricant Toxicity09 abril 2025 -

System Of A Down – Toxicity Lyrics09 abril 2025

System Of A Down – Toxicity Lyrics09 abril 2025 -

Halocene - Toxicity: lyrics and songs09 abril 2025

Halocene - Toxicity: lyrics and songs09 abril 2025 -

System Of A Down - Toxicity (Official HD Video)09 abril 2025

System Of A Down - Toxicity (Official HD Video)09 abril 2025 -

Measures of Toxicity09 abril 2025

Measures of Toxicity09 abril 2025 -

Build a Real-time AI Model to Detect Toxic Behavior in Gaming09 abril 2025

Build a Real-time AI Model to Detect Toxic Behavior in Gaming09 abril 2025 -

Toxicity Repeat Long Sleeve – System of a Down09 abril 2025

Toxicity Repeat Long Sleeve – System of a Down09 abril 2025 -

Abstract Word Cloud For Toxicity With Related Tags And Terms Stock09 abril 2025

Abstract Word Cloud For Toxicity With Related Tags And Terms Stock09 abril 2025 -

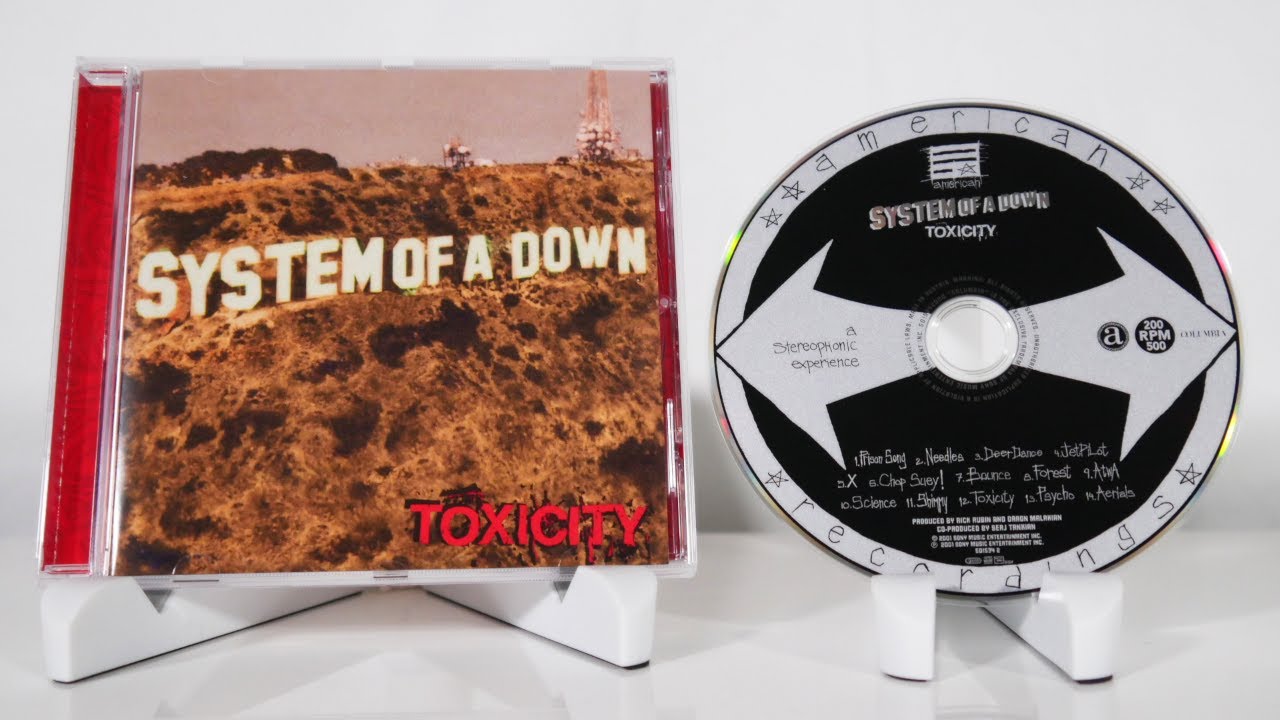

System Of A Down - Toxicity CD Unboxing09 abril 2025

System Of A Down - Toxicity CD Unboxing09 abril 2025
